Projects

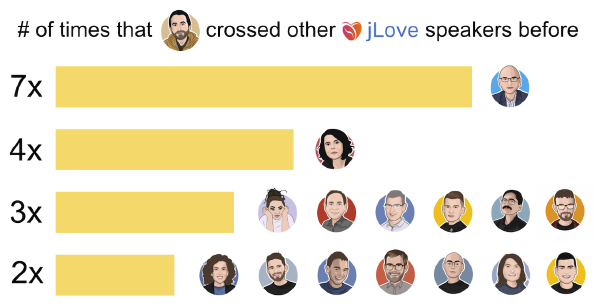

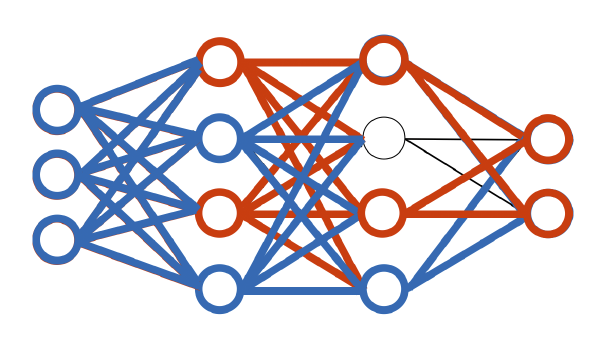

2019 - Raspberry PI AI

- 🚀 GitHub repo with code and dataset.
- 🎙 Conference talk from this project.
- 📖 Talk slides.
- 🐰 My blog post on Deep Learning fundamentals.

In spring 2019, I was working in an office next to the Amsterdam airport, and I could see lots of planes passing by, very close. That view never stopped exciting me, so the idea came: write from scratch, and train, a deep learning network that alerts me whenever a plane is passing by.

Initial setup... ...and final setup. Notice the plane.

The little Pi would take pictures like the examples below. For the purposes of this project, these images belong to two classes: either there is a plane on the bridge, or there is no plane on the bridge.

Class 1: a plane is on the bridge. Class 2: no plane on the bridge.

The actions for this project are:

- Implement a binary classifier Convolutional Neural Network.
- Deploy it on the Raspberry Pi and build the entire pipeline (take pictures, classify them, tweet them…).
- Evaluate the performance of the network and improve it.

Implement a binary classifier

The network I chose to build is a Convolutional Neural Network, starting from a classic LeNet model and tweaking it for my own case. I tried both the TensorFlow Java API and Deeplearning4j. I liked the latter a lot more, as its primary target is the JVM; TensorFlow felt too ‘Python-esque’. All relevant code can be found in the GitHub repo. The following snippet is the part where I bring together all the layers of the neural network.

```scala
import org.deeplearning4j.nn.conf.{MultiLayerConfiguration, NeuralNetConfiguration}
import org.deeplearning4j.nn.conf.inputs.InputType
import org.deeplearning4j.nn.conf.layers.{ConvolutionLayer, DenseLayer, OutputLayer, SubsamplingLayer}
import org.deeplearning4j.nn.conf.layers.SubsamplingLayer.PoolingType
import org.deeplearning4j.nn.weights.WeightInit
import org.nd4j.linalg.activations.Activation
import org.nd4j.linalg.learning.config.Adam
import org.nd4j.linalg.lossfunctions.LossFunctions

// seed, depth, numClasses, outputHeight and outputWidth are defined elsewhere in the repo

// creates the actual neural network
def getNetworkConfiguration: MultiLayerConfiguration = {
  new NeuralNetConfiguration.Builder()
    .seed(seed)
    .l2(0.0005)
    .weightInit(WeightInit.XAVIER)
    .updater(new Adam(1e-3))
    .list
    .layer(0, new ConvolutionLayer.Builder(5, 5)
      // nIn and nOut specify depth: nIn here is the nChannels, nOut is the number of filters to be applied
      .nIn(depth)
      .stride(1, 1)
      .nOut(20)
      .activation(Activation.IDENTITY)
      .build)
    .layer(1, new SubsamplingLayer.Builder(PoolingType.MAX)
      .kernelSize(2, 2)
      .stride(2, 2)
      .build)
    .layer(2, new ConvolutionLayer.Builder(5, 5)
      // Note that nIn need not be specified in later layers
      .stride(1, 1)
      .nOut(50)
      .activation(Activation.IDENTITY)
      .build)
    .layer(3, new SubsamplingLayer.Builder(PoolingType.MAX)
      .kernelSize(2, 2)
      .stride(2, 2)
      .build)
    .layer(4, new DenseLayer.Builder()
      .activation(Activation.RELU)
      .nOut(500)
      .build)
    .layer(5, new OutputLayer.Builder(LossFunctions.LossFunction.MCXENT) // to be used with Softmax
      .nOut(numClasses)
      .activation(Activation.SOFTMAX) // because I have two classes: plane or no-plane
      .build)
    .setInputType(InputType.convolutional(outputHeight, outputWidth, depth))
    .build
}
```

Build the pipeline

I used Akka Streams to bring everything together in a powerful and elegant way. The largely untold truth is that the strictly AI parts of AI projects are just a tiny part of them. So much work goes into the many IO operations: take an image, resize it, copy it around, classify it, tweet it… All of these operations take time and can fail.

This is a diagram where the three most important flows are represented. The only blocks about AI are the blue ones.

I cannot stress enough how powerful Akka Streams is. It is typed, composable, has integrations for any piece of machinery you might need (FTP? Kafka? TCP? File operations?) and is just so much fun to use.

Training the network requires a dataset first. In my case, this meant collecting about 16,000 images, looking at each one of them and labelling it: ‘PLANE’ or ‘NOPLANE’. Painstaking work that might discourage anyone from following these steps, but at the same time crucial for humanity, as we’ll see shortly.

Evaluate the performance of the network

During the training phase, at each training epoch your trainer will use the test images to see how the network performs against them.
That is the first indication of your model’s capabilities. The real world is another thing, though. If you want to evaluate your network’s performance in the open field, you need to save the original image together with the network’s prediction. Only then can you look at the image and check whether the AI got it right. It’s more painstaking work, but at least it comes with a benefit: you can use the human-validated images to enrich your dataset and train your model again.

The most important metrics I looked at were Precision and Recall, both measures between 0.0 and 1.0.

- Precision: shows how well the model avoids false positives.
- Recall: shows how well the model avoids false negatives.

The image above tells us that while the Precision is very high (some days even 1.0), the Recall is overall not that great. Few false positives mean that when the network believes there is a plane in the picture, there usually is one. At the same time, there are a lot of false negatives: there was a plane, but the network didn’t see it.

Which is fine: in fact, the network’s behaviour is always a compromise. In my case, I am happy with having few false positives and many false negatives, because what I care about is that the tweeted images do contain a plane. Not a big deal if the network misses some planes. Different cases will call for a different compromise.

The image below shows common cases where the network incorrectly declares that there is no plane: different weather conditions confuse the network greatly, and so do cases where a plane is only partially visible.

Images where the network didn't see any plane.

Bonus: AI Bias and what to do about it

There is another important factor behind the fluctuating network performance: AI bias. The network was trained in the Netherlands, where the national airline sports blue planes, so the model grew up heavily skewed towards blue planes. Train a network in Amsterdam and it will think that all planes are blue. This shows clearly: the network spots blue planes much better than planes of other colours. Even a playful project like this one turned out to be plagued by AI bias.

The only solution to this problem is a balanced and heterogeneous dataset. This is a VERY difficult challenge. It seems clear to me that datasets should be put together by different people (or entities) than those who actually implement the network. The task of compiling a balanced dataset is incredibly challenging, and we have all seen that the repercussions can be tragic. Think of sensitive domains like health, or any interaction with human rights in general: security, job candidate screening and so forth, where racial or gender bias (just to name two) could creep in at any point. The only solution is tasking the right people with assembling a dataset. In my view, this is one of the most difficult challenges that humanity already faces.
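The Precision/Recall compromise described in the evaluation part can be made concrete in a few lines of plain Scala. This is a minimal sketch, not code from the project's repo: it assumes each human-validated picture is stored as a hypothetical `Validated` record holding the network's softmax probability for PLANE and the label assigned after review, and it shows how a stricter decision threshold trades Recall for Precision.

```scala
// Hypothetical record of one human-validated picture: the network's PLANE
// probability (softmax output) and the label assigned after manual review.
final case class Validated(planeProbability: Double, isPlane: Boolean)

final case class Metrics(precision: Double, recall: Double)

object PlaneMetrics {
  // Predict PLANE when the probability reaches the threshold, then count
  // true positives, false positives and false negatives against the labels.
  def evaluate(samples: Seq[Validated], threshold: Double): Metrics = {
    val pairs = samples.map(s => (s.planeProbability >= threshold, s.isPlane))
    val tp = pairs.count { case (predicted, actual) => predicted && actual }
    val fp = pairs.count { case (predicted, actual) => predicted && !actual }
    val fn = pairs.count { case (predicted, actual) => !predicted && actual }
    // Assumed convention for empty denominators: nothing predicted or nothing to find counts as 1.0
    val precision = if (tp + fp == 0) 1.0 else tp.toDouble / (tp + fp)
    val recall = if (tp + fn == 0) 1.0 else tp.toDouble / (tp + fn)
    Metrics(precision, recall)
  }
}
```

For example, with five validated pictures scored 0.95, 0.7, 0.6, 0.3 and 0.1, where only the first, second and fourth contain a plane, a threshold of 0.5 gives Precision and Recall of 2/3 each, while a threshold of 0.9 raises Precision to 1.0 and drops Recall to 1/3: the same kind of compromise chosen for the tweeting bot.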

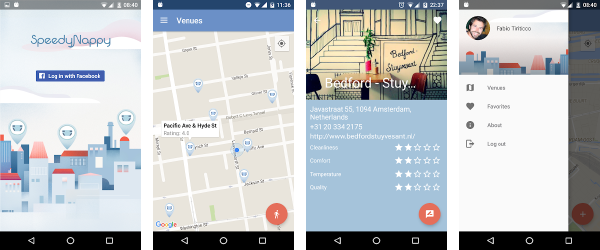

2016 - SpeedyNappy

SpeedyNappy is a side project that I run with my friend and AWESOME illustrator Chiara Vercesi. The mobile app lets users:

- Log in with Facebook credentials
- Search for the closest venue with a changing table
- Get directions to that venue via the Google Maps API
- Rate the venue and add details about it
- Insert a new venue
- Curate a list of favorite venues

The server was a CRUD service built with Scala and Play, deployed on CleverCloud. The client was the most interesting part to develop. I wrote a custom UI rating component using the star icons from the Material Design pack.
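At its core, a star rating component like the one mentioned above maps an average score onto a row of icons. A minimal Scala sketch, assuming a 5-star scale and rounding to the nearest half star (both illustrative choices, not details from the app); `StarRating.stars` is a hypothetical helper that returns which icon to draw in each slot.

```scala
sealed trait Star
case object Full extends Star
case object Half extends Star
case object Empty extends Star

object StarRating {
  // Hypothetical helper. Assumes a 5-star scale rounded to half stars (illustrative choice):
  // round to the nearest half, clamp to [0, maxStars], then expand into icons left to right.
  def stars(average: Double, maxStars: Int = 5): Seq[Star] = {
    val halves = math.round(average * 2).toInt.max(0).min(maxStars * 2)
    val full = halves / 2
    val half = halves % 2
    Seq.fill(full)(Full) ++ Seq.fill(half)(Half) ++ Seq.fill(maxStars - full - half)(Empty)
  }
}
```

For instance, an average of 3.3 becomes three full stars, one half star and one empty star.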

2018 - Ace & Tate

Ace & Tate is a very prominent Dutch eyewear company. I worked there for 4 months in a team of three, with the task of going live with a new backend service powered by Scala and Lagom (a microservices framework developed by Lightbend). The deployment was to be on Google Cloud and its relatively recent managed Kubernetes service, GKE.

The company was very young, and so was the average age of the employees. We techies, with our very minimalist desks, were surrounded by design teams working on new glasses models, store enrichment, posters or advertisements. Definitely one of the most colorful offices I have ever set foot in.

Four months was all it took for this little success story. We were able to go live and replace the legacy system, an organically grown patchwork using both Magento (PHP) and Solidus (Ruby). Other important items, in random order, were:

- Event Storming
- Domain Driven Design
- Always-on streams with Akka Streams (mostly for integrations with third-party systems)
- Hexagonal architecture
- Clustering of services

At the end of 2018 I spoke at a lot of conferences together with my buddy Adam Sandor, and one of the most glamorous pictures of us on stage was taken at the Google DevFest. I was wearing the Ace & Tate sweater 🙂

I had an amazing time at Ace & Tate, a really fun project in a fun environment. Another perk was that their wonderful office was only a brisk 6-minute bicycle ride from my house!

2016 - The Things Network

I am fascinated by the IoT movement. This is why in 2015 I became a regular attendee of the early sessions of The Things Network, a working group based on the nascent LoRaWAN technology. The goal was to create a distributed network of LoRaWAN sensors spread around the city, powered by a backbone that would allow any number of devices to connect to it and exchange payloads. A sort of alternative, local Internet, if you will.

The first meetings were held in the basement of the mythical Rockstart venue on Herengracht in Amsterdam. The atmosphere was that of a total underground hacking team, tinkering around with antennas and electrical components of all kinds. Back then we had an MQTT broker that people could use to check the data produced by their devices and collected by our LoRaWAN network. I wrote an Android SDK to retrieve data from that server and created a sample app to showcase its use.

An underground basement to tinker away. The sample TTN App for the TTN Android SDK.

It was a lot of fun to work there for a while, but soon it became clear that despite the proclaimed openness and crowd-ownership, the actual governance was strictly controlled by the two founders. The Things Industries, a for-profit sister organization of The Things Network, was created some time later. There is nothing wrong with for-profit ventures; the only issue is claiming a project to be open when it factually isn’t.

From the same period is my blog post about my first steps with LoRaWAN and SodaQ.

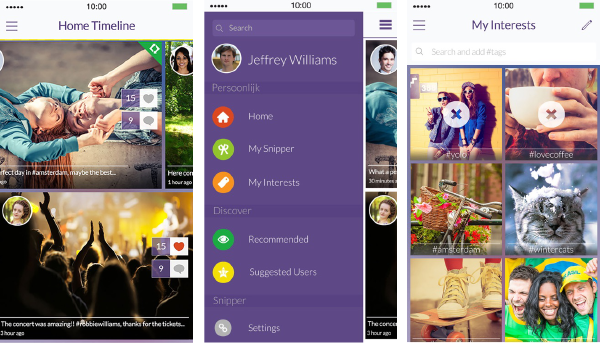

2012 to 2013 - Snipper

In 2012 I left TomTom after almost five wonderful years. A quite incredible week ensued. Started on Monday morning at the Dutch branch of Unic, a web agency. On Tuesday, got contacted by another ex-TomTommer who was working at a local startup. Met him and his colleagues on Wednesday and Thursday. Walked into the Unic office on Friday morning and quit. Started on Monday at the other startup.

The issue with Unic was mainly their tech stack, based around a monolithic Java eCommerce framework called Hybris. The resignation talk I had on Friday with my manager there was legendary. He started yelling, accusing me of lying, and at some point he showed me his wedding ring, saying "..I have a pact with my wife and I don’t sleep with other women!". He clearly missed two points: that I hadn’t married him, and that the ‘trial period’ applies to employees as well as employers. A few months later, that Unic Dutch branch declared bankruptcy.

That is the story of how I became the 4th employee at Snipper. Housed at a breathtaking location in Amsterdam, the company’s main product was a social network focused on video sharing. They had had some encouraging initial success and wanted to boost their tech. The idea was clearly worth the effort - just look at the place that Snapchat and TikTok hold today, or the one Vine held in its time.

My main task there was working on the native Android app. The existing version had been built by an external agency, and we embarked on rewriting the whole thing, server included. The images below show both the previous and the newer version (they might be iOS screenshots; the difference was really minimal).

Back then, Android was still relatively new; the battle with iOS was raging. I think the latest Android API then was Ice Cream Sandwich. We also rebuilt the backend with Java and Tomcat. The legacy server was built using ColdFusion. From the little I saw of it, it was a way to create server logic using a language similar to XML. Ew.
The app had its popularity ups and downs, but another product came to shine: the SnipperWall. It was basically a white-label aggregator webpage that would show, in real time, the ‘conversation’ around a set of hashtags, users and topics on a bunch of social platforms like Twitter, Snipper itself, Instagram and so forth. The company founders were an absolute sales powerhouse, and they managed to close contracts with great partners in the entertainment world, including local celebrities, the Ajax Arena stadium, huge concert venues and the TV show ‘The Voice Of Holland’.
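The SnipperWall idea (merge posts from several platforms, keep those matching a set of hashtags or users, show the newest first) can be sketched in plain Scala. Everything here is hypothetical: the `Post` and `WallConfig` shapes, the case-insensitive matching rule and the `SnipperWall.wall` helper are illustrations, not the original implementation, which consumed live platform feeds.

```scala
// Hypothetical post shape; the real SnipperWall read live feeds from each platform.
final case class Post(platform: String, author: String, text: String, timestampMillis: Long)

// Hypothetical wall configuration: tracked hashtags (with the leading '#') and user names.
final case class WallConfig(hashtags: Set[String], users: Set[String])

object SnipperWall {
  private val Hashtag = "#\\w+".r

  // A post belongs on the wall if a tracked user wrote it or it mentions a tracked
  // hashtag; both comparisons ignore case.
  def matches(post: Post, config: WallConfig): Boolean = {
    val trackedTags = config.hashtags.map(_.toLowerCase)
    val trackedUsers = config.users.map(_.toLowerCase)
    val tags = Hashtag.findAllIn(post.text).map(_.toLowerCase).toSet
    trackedUsers.contains(post.author.toLowerCase) || tags.exists(trackedTags.contains)
  }

  // Merge the per-platform feeds, keep the matching posts and show the newest first.
  def wall(feeds: Seq[Seq[Post]], config: WallConfig, limit: Int): Seq[Post] =
    feeds.flatten
      .filter(matches(_, config))
      .sortBy(p => -p.timestampMillis)
      .take(limit)
}
```

In a live setting the same filter would run continuously over incoming posts rather than over finished lists, but the matching rule stays the same.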

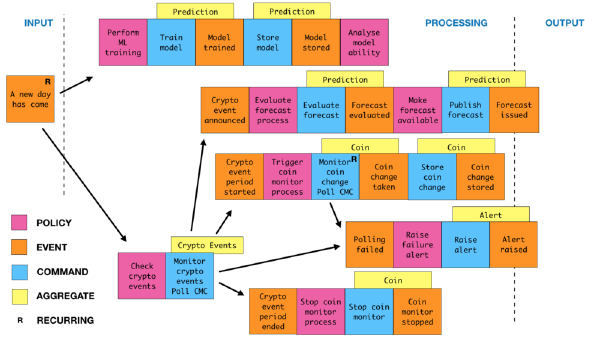

2018 - Caterina

Caterina, named after my niece, was a project to collect data about events related to cryptocurrencies and their price fluctuations. Then maybe I could find some reproducible patterns to reliably predict those fluctuations, and short or long accordingly. I wanted to identify a function such that

F(cryptocurrency, event category) => (price variation around the event, confidence)

For example, F(ETH, community event) => (+10%, 90%).

Besides getting rich, this project combined three goals:

- Identify patterns in the crypto market dynamics.
- Apply the Reactive Architecture principles in a new project.
- Practice development and maintenance of a relatively simple data pipeline.

I wrote a lot about this project; the full writeup is accessible here. I also toured with a talk about this project, called ‘Tame Crypto Events With Streaming’ (recording here).

As a brief recap, I didn’t get any richer 😅

I very much enjoyed doing things the right way, starting with proper modeling of the problem space using Event Storming. Next:

- model the flow into DDD objects and bounded contexts
- implement the model in Scala, Akka and Akka Streams
- put everything in containers and run it on Google Cloud’s Kubernetes.

The logical steps from bounded contexts to streaming are shown in this image, and a little code is below. The top stream (the one just below the ‘CRYPTO EVENTS FLOW’ in the image above) looked like this:

```scala
import akka.pattern.ask
import akka.stream.scaladsl.Source
import scala.concurrent.duration._

// GiveToken, tokenActor, coinActor, eventRequestFlow, eventListUnmarshaller, EventAPI and
// eventPubSubFlow are defined elsewhere in the project; runForeach needs an implicit Materializer.
Source
  // emits a GiveToken object once a day
  .tick(0.seconds, 1.day, GiveToken)
  // asks the Token Actor for the auth token, which is received as a string
  .mapAsync(1)(msg => (tokenActor ? msg)(3.seconds).mapTo[String])
  // passes it to the event request flow, implemented separately
  .via(eventRequestFlow)
  // unmarshals the results and maps them to my internal CoinEvent objects
  .map(eventListUnmarshaller)
  .map(_.flatMap(EventAPI.toCoinEvents))
  // publishes the result on Google Pub/Sub, implemented separately with Alpakka
  .via(eventPubSubFlow)
  // forwards the event list to the Coin Actor
  .runForeach(ev => coinActor ! ev)
```

After a few months of data collection, it was time to perform some data analysis; you can read the outcome here, with lots of colored graphs. For instance, a promising first observation was that the mean price of a coin in the two days after the event went up in almost all cases:

One issue is the extreme volatility of cryptocurrencies. For example, here is the price fluctuation of ETH around an ETH conference in November 2018:

ETH price fluctuation around a conference event

Ideally, you want to buy and sell as per the green line, and avoid the red operation. If you are curious, read more of my conclusions!

All in all, this project was a super learning experience, covering a data project from A to Z, and it gave me a chance to speak at lots of different conferences.
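The function F above can be approximated with a simple estimator. This is a hypothetical sketch, not the analysis actually run for Caterina: for each event it compares the mean price in a window before the event with the mean price in the window after it (two days by default, echoing the observation above), then groups events by coin and category. The "confidence" here is a deliberately naive assumption: the share of events whose price moved in the same direction as the average.

```scala
final case class PricePoint(timestampMillis: Long, price: Double)
final case class CoinEvent(coin: String, category: String, timestampMillis: Long)
final case class Estimate(meanVariationPct: Double, confidence: Double)

// Hypothetical estimator for F(coin, category) => (variation, confidence).
object EventImpact {
  val TwoDaysMillis: Long = 2L * 24 * 60 * 60 * 1000

  private def mean(xs: Seq[Double]): Option[Double] =
    if (xs.isEmpty) None else Some(xs.sum / xs.size)

  // Percentage change between the mean price before the event and the mean price
  // after it; None when either window has no data or the "before" mean is zero.
  def variationPct(event: CoinEvent, prices: Seq[PricePoint], windowMillis: Long = TwoDaysMillis): Option[Double] = {
    val t = event.timestampMillis
    def meanIn(from: Long, until: Long): Option[Double] =
      mean(prices.filter(p => p.timestampMillis >= from && p.timestampMillis < until).map(_.price))
    for {
      before <- meanIn(t - windowMillis, t)
      after <- meanIn(t, t + windowMillis)
      if before != 0
    } yield (after - before) / before * 100
  }

  // Average variation per (coin, category), with the naive confidence described above.
  def estimate(events: Seq[CoinEvent], pricesByCoin: Map[String, Seq[PricePoint]]): Map[(String, String), Estimate] =
    events
      .groupBy(e => (e.coin, e.category))
      .toSeq
      .flatMap { case (key, evs) =>
        val variations = evs.flatMap(e => variationPct(e, pricesByCoin.getOrElse(e.coin, Seq.empty)))
        mean(variations).map { avg =>
          val agreeing = variations.count(v => math.signum(v) == math.signum(avg))
          key -> Estimate(avg, agreeing.toDouble / variations.size)
        }
      }
      .toMap
}
```

With prices this volatile, a real estimator would also need far more events per category and some measure of spread before trusting a "+10%, 90%" style answer.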

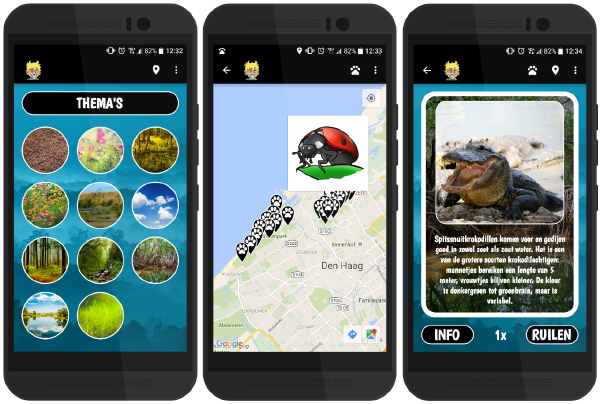

2015 to 2016 - Perfect Earth Animals

Perfect Earth Animals is an educational app for kids. Centered around the discovery of biodiversity, it enabled players to walk in a park and use location services to collect virtual cards of endangered animals scattered around, and learn about them. Animals were divided into themes corresponding to different natural areas such as Rainforest, Desert, Oceans and so on.

The system was quite straightforward: a Play app built in Scala would store and serve all data from a persistence layer in MongoDB. An Angular admin website allowed the client to insert new content, such as new animal cards, images or coordinates where a card would be available.

This project was incredibly special, as it was the one and only paying customer ever for my own startup CloudMatch! Christian, the mind behind the entire Perfect Earth ecosystem, saw the playful potential of exchanging items with gestures across touchscreens and believed in it enough to make it a unique feature of his card game. I’m not sure if I ever told him, but I am very grateful that he believed in it. Below you can see a recording of an excited me showcasing a working prototype with the exchange mechanics in place.

The app was visually beautiful, thanks to the gorgeous illustrations. It was a lot of fun to build too. The current app is an improvement over the one that I built, and I don’t know how much of the logic has stayed, either on the servers or on the mobile client. What I know for sure is that CloudMatch is no longer part of it.
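A card being "available" at some coordinates boils down to a proximity check against the player's position. A minimal sketch, assuming each card has a hypothetical pickup radius in metres and using the haversine great-circle distance; both the `AnimalCard` model and the `CardFinder` helper are illustrations, since the original server logic isn't described here.

```scala
final case class LatLon(lat: Double, lon: Double)

// Hypothetical card model: where the card is placed and how close a player must get.
final case class AnimalCard(name: String, theme: String, location: LatLon, radiusMeters: Double)

object CardFinder {
  private val EarthRadiusMeters = 6371000.0

  // Great-circle distance between two points using the haversine formula.
  def distanceMeters(a: LatLon, b: LatLon): Double = {
    val dLat = math.toRadians(b.lat - a.lat)
    val dLon = math.toRadians(b.lon - a.lon)
    val h = math.pow(math.sin(dLat / 2), 2) +
      math.cos(math.toRadians(a.lat)) * math.cos(math.toRadians(b.lat)) * math.pow(math.sin(dLon / 2), 2)
    2 * EarthRadiusMeters * math.asin(math.sqrt(h))
  }

  // Cards the player can collect from where they are standing.
  def collectable(player: LatLon, cards: Seq[AnimalCard]): Seq[AnimalCard] =
    cards.filter(c => distanceMeters(player, c.location) <= c.radiusMeters)
}
```

Over park-sized distances a flat-earth approximation would also do, but haversine stays correct wherever the cards are placed.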

2016 to 2018 - Weeronline

Weeronline is one of the leading weather forecast platforms in the Netherlands. ‘Weeronline’ literally translates to ‘Weather online’. Mostly ad-funded, Weeronline is a very successful business. In fact, I was quite shocked to learn the yearly revenues, but it all made sense when I realized that weather services are most valuable in places where the weather is very uncertain and changeable. For example, in Sicily, where you would expect the sun to shine on most days throughout the day, you would rarely check a forecast app. In the Netherlands, with its famously unpredictable ‘four seasons in one day’ weather, these apps are literally among the most useful of all.

During my years at Weeronline I held three different roles, and each one gets its own section.

Java Android Developer

I worked on the release of the second version of the Android app. My main achievement was introducing dependency injection with Dagger, which led to a cleaner codebase. Besides that huge effort, the rest was the usual app development that you would expect:

- HTTP interaction with data sources, managing latency and errors
- Asset management for different screens and resolutions
- UI navigation

Scala Backend Developer

The company Weeronline was part of a bigger German holding called HolidayCheck (which sold it in 2020). They used Scala as their main backend language, and there was an effort to unify the tech stack. The challenge was immense, as Weeronline’s legacy servers were written in Visual Basic 6, which nobody really understood. One of my first tasks was indeed to map all that incredible legacy, spread across dozens of servers managed by a local provider. The result of that effort was the infamous ‘lanes’. I covered an entire wall with these flows linking data sources, processing logic, outcomes and their business value.

Johnny is mind-blown by the lanes.

Mapping those ETL flows was a huge effort, but it paid off in terms of visibility and prioritization of the work to do.
Two features emerged as the most valuable ones within the system: the 2-hour precipitation radar and the global forecast up to 14 days in the future. Had the current implementations gone down, the company would have suffered great losses.

The precipitation radar was the first one to tackle. The Dutch Meteorological Institute (KNMI) regularly releases satellite images of the Dutch sky. Combining those with wind, humidity and other factors, it’s possible to generate reliable precipitation predictions for the coming 120 minutes. You still need to remove noise, among other steps, but the process looks roughly like this:

The other massive piece of work was the weather forecast up to 14 days in the future. Data was regularly ingested from a third-party provider, a German company with 16,000 weather stations all around the globe. This immense amount of information was processed through a lot of different formulas to output worldwide forecasts, wind information, activity suggestions based on weather and a lot more. It would look like this in the frontend.

Both features were built using Scala and Akka (Streams), packaged as Docker containers and deployed on Kubernetes, mostly using Kafka as glue between the different services.

Tech Lead

At some point at the end of 2016 I was made Tech Lead, and the entire development department reported to me. I stopped coding and instead became more involved in high-level architectural discussions spanning the entire spectrum, from backend services to mobile and web frontends. I became a link between stakeholders, product owners and developers, and actively helped shape roadmaps based on required development efforts and business value. I did a lot of hiring and people management too, growing the department to 16 developers at its peak, divided into three teams, each one with its own leader. I fought for, and won, some free creative time (a monthly internal event named Freaky Friday).
I did my best to foster community engagement within my department, hosting a few meetup events (I spoke at two of them).

This career change definitely took me out of my comfort zone. All of a sudden I was in meetings all day, and ‘time management’ acquired a whole new meaning. Looking back at the experience, I think I did an honest job, but I can also see so many things I could have done differently. Letting go of the technical side wasn’t easy, and I should definitely have delegated more. I worked hard to get my vision realized, while what matters is the team’s vision. I tried to be on top of too many things at the same time, which resulted in a lot of stress.

The hardest part was the leader’s loneliness. After being a ‘brogrammer’ throughout my career, in the new role I felt disconnected from the devs in my team, as if an invisible wall stood between us. I had to manage a few very difficult cases: a despotic developer with sociopathic traits, a poorly skilled one, making someone redundant (dealing with laws and lawyers), and all the internal conflict that ensued. This all took a toll, and after exactly one year I went back to software development.

As the saying goes, though, experience is the best teacher. I really have learned a lot from my mistakes, and I worked hard on my empathy and compassion. As I write, I am in a leading position again, and I can see the huge difference. I am finally able to put into practice all the good advice from my manager at the time: to delegate and let go, focusing on the longer-term wins rather than the daily reward of fixing a bug or adding a feature. I have my side projects for those kinds of tech achievements! Approaching my job with a different perspective lets me embrace both the pains and the joys of being a leader, and I am actually even having fun! Without the experience at Weeronline I wouldn’t have been able to tackle the current one with this spirit.
And I can only be all the more thankful to everyone that I met there, difficult people included. 🙂
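The 2-hour precipitation radar from the Scala Backend Developer period can be illustrated with the simplest possible nowcast: assume the current rain field just drifts with a constant wind, and shift it step by step. This is a deliberately simplified sketch, not the Weeronline pipeline (which also dealt with noise, humidity and other factors); the grid layout, the 5-minute step and the wind expressed in whole grid cells per step are all assumptions made for illustration.

```scala
// Simplified advection nowcast, for illustration only: constant wind, whole-cell shifts.
object Nowcast {
  type Grid = Vector[Vector[Double]] // rain intensity per cell, indexed as grid(row)(col)

  // Shift the grid by (dx, dy) cells: the value at (row, col) moves to (row + dy, col + dx).
  // Cells with no upstream data (rain "entering" from outside the map) become 0.
  def advect(grid: Grid, dx: Int, dy: Int): Grid = {
    val rows = grid.length
    val cols = if (rows == 0) 0 else grid.head.length
    Vector.tabulate(rows, cols) { (r, c) =>
      val srcR = r - dy
      val srcC = c - dx
      if (srcR >= 0 && srcR < rows && srcC >= 0 && srcC < cols) grid(srcR)(srcC) else 0.0
    }
  }

  // One forecast frame per step up to the horizon (120 minutes / 5 = 24 frames by default),
  // assuming the wind moves the rain field by (dxPerStep, dyPerStep) cells every step.
  def forecast(latest: Grid, dxPerStep: Int, dyPerStep: Int,
               horizonMinutes: Int = 120, stepMinutes: Int = 5): Seq[Grid] =
    (1 to horizonMinutes / stepMinutes).map(n => advect(latest, n * dxPerStep, n * dyPerStep))
}
```

Real nowcasting estimates the motion field from consecutive images and interpolates sub-cell movements, which is where most of the hard work lies.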

2007 to 2012 - TomTom

I worked at TomTom between 2007 and 2012. While doing research on Internet topology at the University of Amsterdam, I explored opportunities for a PhD and concluded that it wasn’t something I wanted to do. Those few years in academia felt like enough, and I was ready to look for something else. After a few job interviews I accepted TomTom’s offer. At that time, growth was really fast and TomTom was hiring about 30 people a month!

Over the course of 4+ years I held different roles, mostly in embedded development. The pre-2010 devices worked with a home-grown framework written in C++. In fact, it was the legacy of the very first code written by the TomTom founders back in the 90s. The core functionality was a loop that would give resources to all necessary components in a round-robin fashion. There were about 25 items in this loop: things like the UI thread, the GPS signal, data connectivity via GPRS, map data and so forth.

I personally worked on the user interface, dealing with user clicks, fetching stuff and coloring the screen. A big part of it was collaborating with the UX department, which was sometimes joyful and sometimes frustrating 😅 One of my biggest achievements was the modernization of the status bar at the bottom of the navigation screen.

Around 2009 we started working on a big project: the UI was moved out of the C++ framework and rendered instead via a web-like combination of HTML / JavaScript / CSS. Because of my experience, I sat in the middle, working on the interface that would connect the two parts. The result was a UI that looked fresh, came with some ‘free’ features (for instance, animations became a lot easier) and was easier to innovate on. As it turned out, this implementation was short-lived. After a few devices were released, new management decided to switch the entire thing to a custom version of Android.
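The round-robin main loop of the pre-2010 framework can be illustrated with a small cooperative scheduler. This is a hypothetical Scala sketch (the original was C++ and is not public): every component gets one short `step` call per pass and must return quickly, so no single item can starve the others. The `Component` trait, `RoundRobinLoop` and the `Recorder` toy component are illustrative names.

```scala
import scala.collection.mutable

// Hypothetical component interface; the original framework was C++ and is not public.
trait Component {
  def name: String
  // Do a small, bounded amount of work and return, so the next component gets its turn.
  def step(tick: Long): Unit
}

// Cooperative round-robin loop: components run one after another in a fixed order,
// and one pass over all of them counts as one tick.
final class RoundRobinLoop(components: Seq[Component]) {
  private var tick = 0L

  def runOnce(): Unit = {
    components.foreach(_.step(tick))
    tick += 1
  }

  def run(passes: Int): Unit = (1 to passes).foreach(_ => runOnce())
}

// Toy component that only records when it was scheduled, e.g. "ui@0".
final class Recorder(val name: String, log: mutable.Buffer[String]) extends Component {
  def step(tick: Long): Unit = log += s"$name@$tick"
}
```

The design choice this illustrates is cooperation instead of preemption: it is simple and predictable on constrained hardware, but one misbehaving component that blocks inside `step` stalls the whole device.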
In the meantime, TomTom had secured a few big automotive contracts to build “infotainment” systems that would come with the car. The last project I worked on was the Bluetooth connectivity to enable hands-free calling, music through the car stereo and things like that. Since it was one of the first Android iterations, the whole thing was powered by Java (version 6, I believe).

Tech aspects aside, TomTom is one of my best professional experiences to date. It was that magical phase where youth and novelty made every atom in my body love the company and my colleagues. I have wonderful memories of TomTom. It was my first experience in a young, vibrant company in a new country. Some of my closest friends in Amsterdam come from that period.

I even ran the charity Dam Tot Damloop 2008 with the company! That particular experience was actually insane. In my young optimism, I hadn’t trained for a single minute before the run – I just showed up. This is what I wrote to my friends afterwards.

I could run something more than 10 km, after which I’ve been mostly walking with a left leg unwilling to cooperate any further. A good thing is I had a running mate: an English guy who also hadn’t trained at all. Those are excerpts of our conversations at different stages:

- 0 km, countdown to start: “good luck!!! how do you say in italian?” - “in bocca al lupo” - “ok, een bowcah aw loopow!”
- 1 km (out of the ij tunnel): “we see the light at the end of the tunnel!!!”
- 3 km, total excitement: “hey this is going good man, we can make it!”
- 5 km: “wow all these people cheering are motivating. maybe we can make it”
- 7 km: “how are you man?” - “i’m in pain. how about you?”
- 8.5 km, pain rises and questions about physical details too: “do you feel your stomach contracting?” - “yeah. do you feel your heart bumping in your brain?”
- 9.5 km, desperate comments: “it was pretty crazy of us to enroll” - “right – this is the most stupid thing I’ve done this year”

I crossed the line walking like a mutilated penguin and went home with my left knee screaming Italian blasphemies. I could barely walk and I only had a frozen Alaskan codfish to help ease the pain. My sister tried to cheer me up by repeating that it was for a good cause.

I feel so lucky to have been part of the TomTom family for those years. Some friends still work there after 12 years, and I know exactly why.

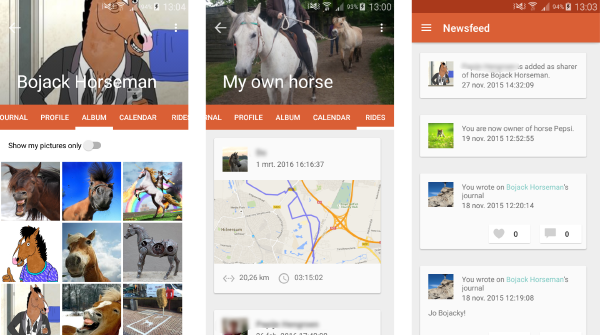

2014 to 2015 - Horsha

Horsha (from ‘Horse’ + ‘Share’) was a startup in stealth mode which never came out of stealth mode. The idea was a social network specialized for horse owners, to help them share the care (and the costs) of a horse. Over time, it evolved into a full-blown clone of Facebook, with pictures, events, likes, friends and so forth.

I worked there for about a year and was in charge of the Android app, which was coming together quite nicely. During that time, I also started working on one of my most successful open-source projects, Android GPX Parser, a library built specifically for Android to parse the GPX files produced by GPS tracking devices. We needed it for the “share ride” functionality.

The project ran for a few years in total, always in stealth mode, before finally being abandoned without ever seeing the light. I can’t help thinking that by avoiding feature creep and following the most basic startup advice (release fast, iterate fast, fail fast) history could have been different.
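To give an idea of what parsing GPX involves, here is a minimal sketch in Scala using only the JDK's built-in DOM parser. It is not the Android GPX Parser library's API: it only reads the `lat`/`lon` attributes and the optional `<ele>` and `<time>` children of each `<trkpt>`, which is where GPX 1.1 stores track points. `TrackPoint` and `GpxSketch` are hypothetical names.

```scala
import java.io.ByteArrayInputStream
import java.nio.charset.StandardCharsets
import javax.xml.parsers.DocumentBuilderFactory
import org.w3c.dom.Element

// Hypothetical track point model; not the Android GPX Parser library's types.
final case class TrackPoint(lat: Double, lon: Double, elevation: Option[Double], time: Option[String])

object GpxSketch {
  // Text of the first descendant with the given local name, in any namespace.
  private def childText(el: Element, tag: String): Option[String] = {
    val nodes = el.getElementsByTagNameNS("*", tag)
    if (nodes.getLength == 0) None else Option(nodes.item(0).getTextContent).map(_.trim)
  }

  // Parse every <trkpt> element, regardless of the GPX namespace used by the file.
  def parse(gpx: String): Seq[TrackPoint] = {
    val factory = DocumentBuilderFactory.newInstance()
    factory.setNamespaceAware(true)
    val doc = factory.newDocumentBuilder()
      .parse(new ByteArrayInputStream(gpx.getBytes(StandardCharsets.UTF_8)))
    val points = doc.getElementsByTagNameNS("*", "trkpt")
    (0 until points.getLength).map { i =>
      val el = points.item(i).asInstanceOf[Element]
      TrackPoint(
        lat = el.getAttribute("lat").toDouble,
        lon = el.getAttribute("lon").toDouble,
        elevation = childText(el, "ele").map(_.toDouble),
        time = childText(el, "time")
      )
    }
  }
}
```

On Android, a streaming parser such as `XmlPullParser` is usually a better fit for large tracks than building a full DOM in memory.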

2003 to 2007 - Academy Research

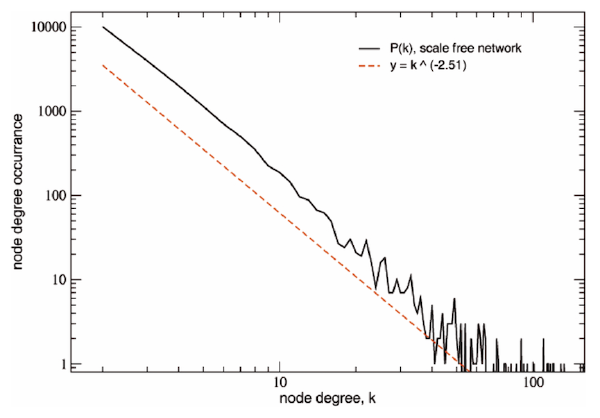

My time in college, studying Telecommunication Engineering at the University of Rome “Tor Vergata”, resulted in some interesting research in the networking field. I was especially interested in topology matters. The actual shape of the Internet: what does it look like? What kind of properties does a network grown without supervision have? What are its pitfalls? Can we do anything to improve it?

This is a list of the scientific publications that I authored.

| Year | Publication | Journal |
|------|-------------|---------|
| 2008 | Is the topology of the Internet network really fit to its function? | Physica A 387 (2008) 1689-1704 |
| 2008 | Simulation of Data Traffic Dynamics on Large Complex Networks | Hpc-Europa Report |
| 2008 | Modelling interdependent infrastructures using interacting dynamical models | Int. J. Critical Infrastructures |
| 2007 | Topological properties of high-voltage electrical transmission networks | Electric Power Systems Res., 77 (2007) 99-105 |
| 2006 | Telecommunication Engineering, Master’s Degree Final Dissertation | Printed in 3 copies |
| 2004 | Growth mechanisms of the AS-level Internet network | Europhys. Lett. 66 (2004) 471 |

The starting point of the research was a body of work that Barabási, Strogatz et al. had been working on since the late 90s. Their findings showed that in organically grown networks the degree distribution follows a decreasing power law, $P(k) \propto k^{-a}$, where the degree $k$ of a node is the number of connections between that node and other nodes. In practice, there will be a few nodes with a very big degree (hubs) and a vast number of nodes with a very small degree (leaves). Plotted on a log-log scale, the distribution then looks approximately linear, since $\log P(k) = -a \log k + c$. Such networks, called “scale-free”, are found in different fields: biology, sociology, tech and much more.
For instance:

- the interactions between proteins in a cell's metabolism
- the contagion network of sexually transmitted diseases
- the autonomous-system level of Internet gateways

When taking into account the flow of information through the network, scale-free networks display clear advantages over networks with different distributions. Notably:

- The average distance between any two nodes tends to be shorter, as most shortest paths will travel through the hub nodes.
- The degradation of the network’s performance following the removal of random nodes is greatly reduced, as most nodes are poorly connected.

At the same time, such networks are very vulnerable to targeted attacks: the removal of hubs can bring the entire network down and compromise its functioning, both globally and locally. One such example was clearly observable after the destruction of the Twin Towers in New York on the 11th of September 2001: below them, a key node of the optical connection between Europe and the US was destroyed too. As a result, packets crossing the Atlantic Ocean had to find new, longer routes, which in turn became congested.

This was the main context of my research. On the technical side, I used C++ to build a simulator that could build a network, let traffic flow through it (with different routing strategies) and analyze the network performance and behaviours. I just looked at the code for the first time in about 14 years, and it was obviously shocking and embarrassing. Were I to do this research again, oh boy, it would look so different.
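The preferential-attachment growth behind scale-free networks, and their contrasting behaviour under random failures versus targeted attacks, can be reproduced in a few lines. This is a small Scala sketch of the textbook Barabási-Albert model, not the C++ simulator described above; the network size, `m` and the number of removed nodes are arbitrary illustration values.

```scala
import scala.collection.mutable
import scala.util.Random

// Textbook Barabási-Albert model, for illustration only; sizes and m are arbitrary.
object ScaleFree {
  // Start from a clique of m + 1 nodes; every new node then links to m distinct
  // existing nodes picked with probability proportional to their degree.
  def barabasiAlbert(n: Int, m: Int, rng: Random): Vector[Set[Int]] = {
    require(m >= 1 && n > m, "need n > m >= 1")
    val adj = Array.fill(n)(mutable.Set.empty[Int])
    // Each edge appends both endpoints, so a uniform pick from this buffer
    // selects a node with probability proportional to its degree.
    val endpoints = mutable.ArrayBuffer.empty[Int]
    def link(a: Int, b: Int): Unit = {
      adj(a) += b
      adj(b) += a
      endpoints += a
      endpoints += b
    }
    for (a <- 0 to m; b <- a + 1 to m) link(a, b)
    for (v <- m + 1 until n) {
      val targets = mutable.Set.empty[Int]
      while (targets.size < m) targets += endpoints(rng.nextInt(endpoints.size))
      targets.foreach(t => link(v, t))
    }
    adj.map(_.toSet).toVector
  }

  // Size of the largest connected component once the `removed` nodes are deleted.
  def largestComponent(adj: Vector[Set[Int]], removed: Set[Int]): Int = {
    val seen = mutable.Set.empty[Int] ++= removed
    var best = 0
    for (start <- adj.indices if !seen(start)) {
      seen += start
      var stack = List(start)
      var size = 0
      while (stack.nonEmpty) {
        val v = stack.head
        stack = stack.tail
        size += 1
        for (w <- adj(v) if !seen(w)) {
          seen += w
          stack = w :: stack
        }
      }
      best = math.max(best, size)
    }
    best
  }

  // Remove `count` nodes chosen at random.
  def randomFailure(adj: Vector[Set[Int]], count: Int, rng: Random): Int =
    largestComponent(adj, rng.shuffle(adj.indices.toList).take(count).toSet)

  // Remove the `count` best-connected nodes (hubs first).
  def targetedAttack(adj: Vector[Set[Int]], count: Int): Int =
    largestComponent(adj, adj.indices.sortBy(i => -adj(i).size).take(count).toSet)
}
```

Growing, say, a 2,000-node network with `m = 2` and removing its 100 best-connected nodes typically leaves a much smaller largest component than removing 100 random nodes, which is the asymmetry described above.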

2013 to 2014 - CloudMatch

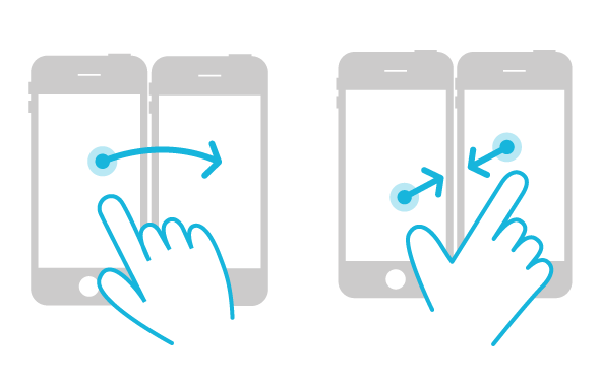

The Idea

CloudMatch was a side project that I tried building a company around. Born in the wake of the mobile revolution, the idea was simple: enable cross-gesture communication between two touchscreen devices. The development of this product is the reason why I discovered Scala, Akka and the ecosystem of reactive systems that I fell in love with.

Business-wise, we envisioned a lot of different use cases and closed a few fun collaborations (we even had one paying customer!), but no use case was compelling enough to sustain a proper business. Ultimately, unable to find a good product-market fit, we abandoned the idea and moved on. Some ideas that we explored:

- Banking sector (money transfer)
- Exchange of pictures / business cards / social contacts / used items
- Educational games
- Videogames (the only paying customer)

These two videos show a couple of use cases that we built.

What remains is an incredible roller-coaster experience and a very solid technical achievement. One of my most successful conference talks ever was based on the CloudMatch engine that I built for scalability and resilience.

Cloud-Native Architecture

The engine has two main goals:

- establish a link between the matched devices
- allow a fast channel for data exchange

While there would be ways to make two devices communicate directly with one another, the most generic solution is to have a common service facilitating these operations. This allows the magic to happen in the widest range of situations, whether devices are on WiFi or mobile data, Bluetooth, or any other form of connectivity.

The question is then: what should this service look like? For starters, we need this service to scale A LOT. Millions of concurrent users will use our system, we know that. We need to embrace distribution.
The basic choice is thus between a stateless and a stateful service, where the former implies delegating state to a persistence layer. This would quickly become a nightmare of reads and writes: incredibly slow and incredibly expensive too. A stateful service seems the only viable architecture: one where two devices can live on any instance of the distributed service and communicate directly, as illustrated in the image on the right. (Figure captions: “We need to link devices. How?” and “A stateful service allows direct communication between devices.”)

Server Implementation

Akka is a toolkit for the JVM whose very mission is building such distributed services, and that is how I first came to learn about its existence. Actors are the fundamental unit of computation in Akka: stateful entities that live in memory and can message each other directly. Akka provides a powerful abstraction that makes this possible even when two actors are on different nodes on different physical machines. This is exactly what my engine needs. Each device using CloudMatch is represented by an actor, which keeps track of its state, for example whether it’s paired to another device. This Scala snippet shows how an actor stores the reference to the actor representing the matched device when it receives a message carrying that information:

```scala
// Inside the actor representing a device
var matchedDevice: Option[ActorRef] = None

override def receive: Receive = {
  case YouMatchedWith(device) =>
    matchedDevice = Some(device) // remember which actor we are paired with
    logMatched(self, device)
}
```

A complete working version of this product is available on GitHub. This product was also the foundation for a series of talks I gave back in 2018, together with Adam Sandor, on how Akka is the perfect application-level companion for Kubernetes. Lightbend, the company behind Akka, invited me to give it as a webinar on their official channel.

Mobile Clients

Any app that wanted to use the CloudMatch features could do so through the CloudMatch SDK, an open-source library for both iOS and Android.
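Returning to the server side for a moment: to make the stateful design concrete without pulling in Akka, here is a minimal single-process sketch in plain Scala. It is not the CloudMatch code; `DeviceSession`, `pair` and `send` are hypothetical names. In CloudMatch each device is an actor reached through an `ActorRef`, which Akka can deliver to across nodes; that distribution is exactly what this sketch leaves out.

```scala
import scala.collection.mutable

// Hypothetical, dependency-free model of the stateful design (not Akka and not
// the CloudMatch code): each device's state lives in memory in the service, so
// pairing and forwarding never go through a database.
object StatefulMatching {
  final class DeviceSession(val id: String) {
    // Same idea as the actor's `matchedDevice` field.
    var matchedDevice: Option[DeviceSession] = None
    // Payloads received from the matched device.
    val inbox: mutable.Queue[String] = mutable.Queue.empty[String]

    // Analogous to handling a YouMatchedWith message.
    def youMatchedWith(other: DeviceSession): Unit = matchedDevice = Some(other)

    // Deliver a payload straight to the matched session; false if unmatched.
    def send(payload: String): Boolean = matchedDevice match {
      case Some(other) =>
        other.inbox.enqueue(payload)
        true
      case None => false
    }
  }

  // Link two sessions by telling each one about the other.
  def pair(a: DeviceSession, b: DeviceSession): Unit = {
    a.youMatchedWith(b)
    b.youMatchedWith(a)
  }
}
```

Because the match is a plain in-memory reference, forwarding a payload costs a method call rather than a read and a write against a persistence layer, which is the trade-off that rules out the stateless option.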
Here is some more memorabilia from back then to close this portfolio entry. Promotional material for Swipic.

2001 to 2006 - Litfiba Official Website

The greatest Italian rock band of all time offered me the position of Webmaster for their official website back in 2001. This came about because I had created a fan page about the band a few years earlier. I enthusiastically took on the role and initially maintained their existing website, until a new one was due. I took care of both design and development; for the latter I chose Macromedia Flash and coded everything in ActionScript 1.0. The result looked incredible, had smooth animations everywhere and loaded very fast. In fact, the animations were such a core part of it that I decided to record a screencast to show what it looked like. To me, the website still looks awesome to this day and has fully passed the test of time! The website design was heavily inspired by my Telecommunication Engineering studies at the time: electrical symbols and circuitry are recurring elements. Usability was the main issue. The website didn’t look like anything else around at the time and was therefore difficult to navigate at first glance. From a business perspective, it was also quite a niche technology, while most websites were HTML tables with some JS sprinkled on top, powered by PHP backends. I still have the compiled .swf files, but the source .fla files are no longer supported by Adobe and I couldn’t find a way to edit them again. I worked there for a total of five years, which mostly overlapped with my college studies. During the first year, 2001, I had a contract with EMI Music.

1998 to 2001 - Litfinternet

My first website ever. The source code is on GitHub, and the website is still alive. Back in 1998 I was 16 and the World Wide Web was a new thing. I was also in love with the greatest Italian rock band of all time, Litfiba. My nickname was Shark. I decided to create a website about the band. The site would be called “Litfinternet”, from Litfiba + Internet. I learned HTML from some weekly guides sold with the Italian newspaper “La Repubblica”. I remember writing it all by hand in Windows 95’s Notepad. No CSS, no JavaScript, no PHP. Only HTML. This is what the resulting HTML looked like: no indentation, all caps.

```html
<HTML>
<HEAD><TITLE>Litfiba</TITLE></HEAD>
<BODY bgcolor=#553E37 link=#FF8040 vlink=#FFFF00>
<IMG HSPACE=110 HSPACE=30 VSPACE=30 HEIGHT=45% WEIGHT=45% ALIGN=RIGHT SRC="../foto/Litfiba.jpg">
<H2>Litfiba (1982)</H2>
<UL>
<LI>Guerra
<LI>Luna
<LI>Under the Moon
<LI>Man In Suicide
</UL>
<P><STRONG>Mini Review:</STRONG> Well guys, this is really asking too much, even for a serious fan like me, who unfortunately can't be considered a true collector. This album is impossible to find in the usual music haunts (ergo, record shops), and I think the only place where you can get it is the "collectors' markets", where people trade, sell and buy "ancient" music for their collections. As far as I know the price is over a million lire, and I believe there are very few copies around, considering that back then the band's only fans were (maybe) Piero's and Ghigo's parents. Guerra and Luna were re-released on Desaparecido and Litfiba Live respectively, but I don't know how different these might be; I honestly haven't the faintest idea.
I could guess that, since in the early '80s live reworkings weren't like today's, the Luna on this album isn't that different from the one on Litfiba Live, apart from Piero's spoken part with the echo (...I'll be king and dictator...I'll crush you like shit...the masses are shit etc etc. (good times!)). Anyway, I'd ask any visitors of this site who own this album to send me an <A HREF="mailto:tirix@tin.it">email by clicking here</A>, answering the questions left open in this mini review, and also telling me what the last three songs are about... PLEASE!</P>
<CENTER>
<CENTER><A HREF="album.htm">Back to the index</A></CENTER>
</CENTER>
```

Besides coding, I was also the designer and illustrator for this website; back then, that role was called ‘WebMaster’. The ’90s naivety and tackiness are in full swing. An Italian ISP named Tiscali was my host of choice: to their absolute credit, the website is still up and running and fully functional, with the notable exception of a few dynamic services powered by Bravenet, such as a visitor counter and a PHP forum added much later. The forum became the most important online platform for fans of the band, especially in crucial times such as when the band split. A lot of work went into moderating the forum, and despite the early trolls (unforgettable people such as Asterix, or a certain Robbie who was an absolutely toxic presence), good relationships were forged and it was a lot of fun. Here’s one final message I posted when I eventually shut it down. So many memories.